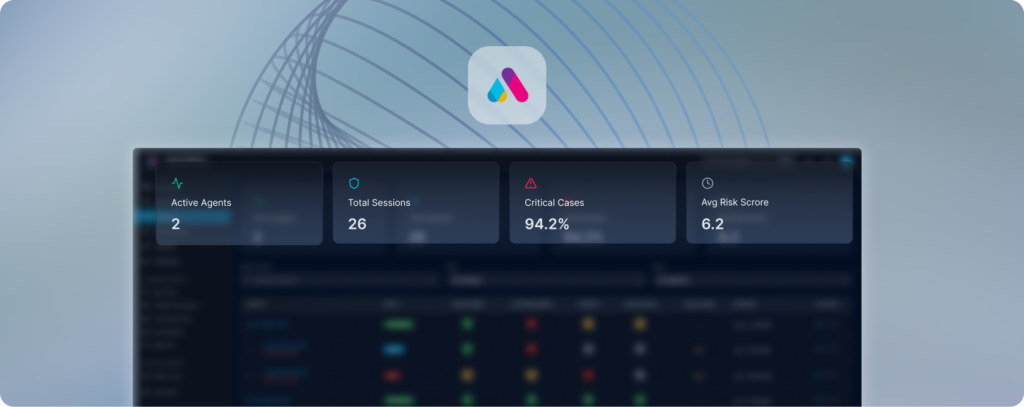

Agent Assure is how financial services firms assure their AI agents before and after deployment.

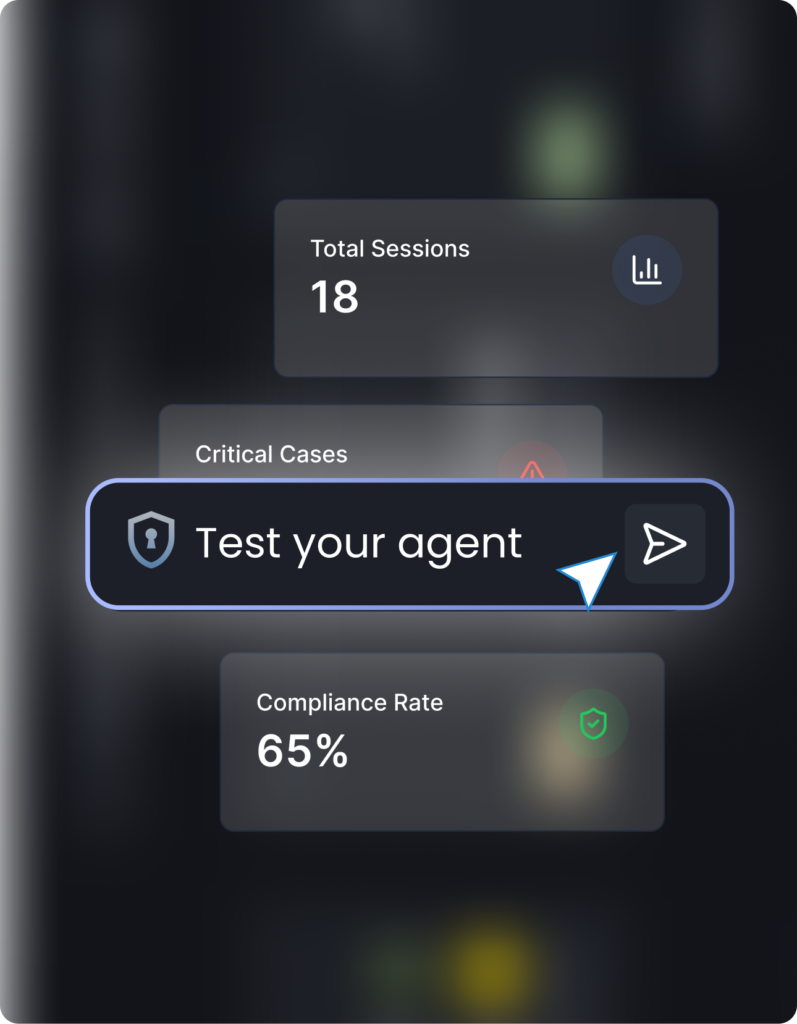

Use our Agent Assure Sandbox to simulate journeys, detect risk, and generate an explainable assurance report before your agent reaches customers.

Firms are moving fast from copilots to agents, but traditional assurance methods (sampling, manual QA, retrospective monitoring) can’t keep up.

If you can’t evidence control, you can’t scale deployment.

The assurance layer for agentic AI

Your pre-deployment safety test for AI agents

A controlled environment to test agent behaviour before launch.

Run simulated customer journeys and edge cases

Identify unsafe behaviours and missing disclosures

Generate an Agent Assurance Report with evidence traces

Iterate and retest before go-live

Structured findings and severity

Guardrails, monitoring, escalation rules

Why it was flagged, what triggered the judgement

What’s safe now vs. what needs work

Apply for Early Access to the Agent Assure Sandbox.