When advisers talk about AI, their hesitation isn’t about change. Rather, it’s about trust. In conversations Aveni has had with advisers, one phrase appears again and again: “I need to know what it’s seeing and what it’s not.”

That single sentence captures the core issue with generic AI in regulated advice. The question is not whether a model can summarise; it is whether an adviser can stand behind its output when compliance calls.

AI without oversight cannot work in financial advice

Advisers operate in an environment where evidence matters more than efficiency. Missing a disclosure, misunderstanding a client's goal, or miscategorising a vulnerability is not a small oversight. It is a compliance risk.

Firms understand this. Automation that saves time but introduces uncertainty is not progress. Trust in AI comes from control, not convenience.

The human-in-the-loop principle

Aveni’s conversations with advisers show that they want technology to assist, not decide. They want prompts that flag missing information, context they can review, and a clear link between every AI-generated sentence and its original source.

This is what “human-in-the-loop” means in practice: AI handling the routine work while advisers retain judgement and accountability.

At Aveni, that principle guides every design decision.

- Aveni Assist builds transparency into document creation. Every recommendation, fee disclosure, and product rationale is traceable to the conversation or CRM record it came from.

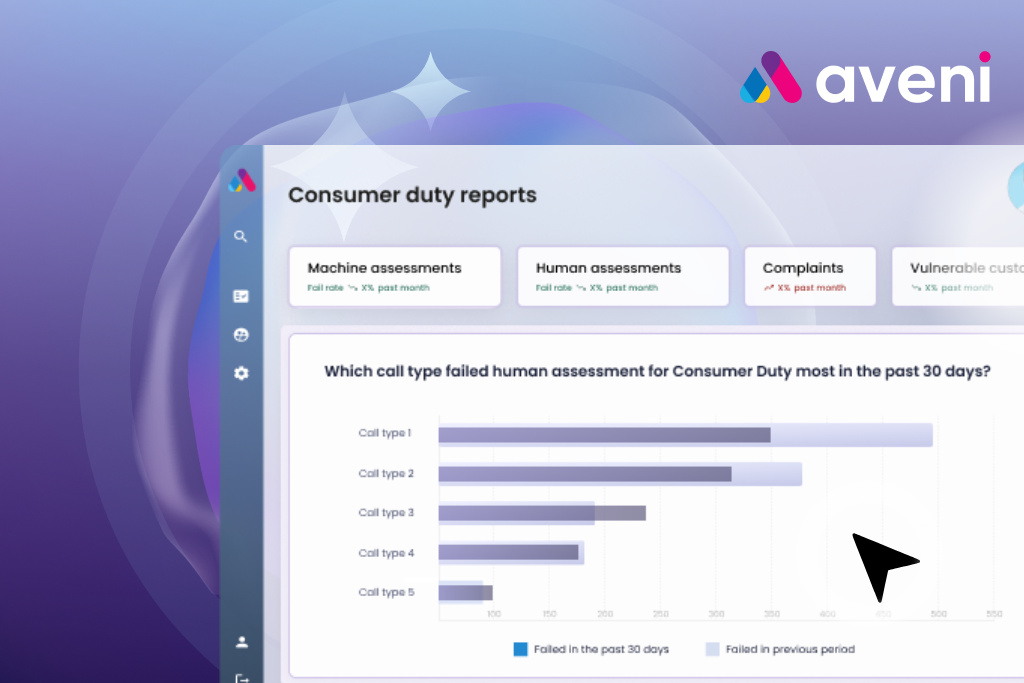

- Aveni Detect enables oversight across thousands of client interactions, surfacing risk patterns early while keeping human review in control.

- FinLLM powers both with models trained specifically for UK financial services, aligned to FCA standards and terminology.

Key takeaway: Human-in-the-loop AI leaves the routine work to the technology and the judgement to the adviser, with every output traceable to its source.

From scepticism to assurance

When advisers can see why AI reached an outcome, and confirm it before client delivery, scepticism turns into confidence. Firms that include compliance teams early in adoption see faster uptake, because oversight becomes part of the process rather than an afterthought.

AI does not replace human assurance. It strengthens it. That is the difference between tools that assist and systems that are assured.

Listening to hesitation: human oversight in AI financial advice

These concerns matter because they reflect a profession that wants to move forward responsibly. The worry about what AI might miss is not resistance. It is a call for clarity, traceability, and partnership.

As AI becomes part of the advice infrastructure, firms that embed transparency, auditability, and human oversight will earn regulator confidence and client trust.

Discover how human-in-the-loop AI earns client trust

See how Aveni’s platform automates regulated workflows while strengthening compliance oversight