What a viral meme tells us about how AI gets things wrong – and why we should care

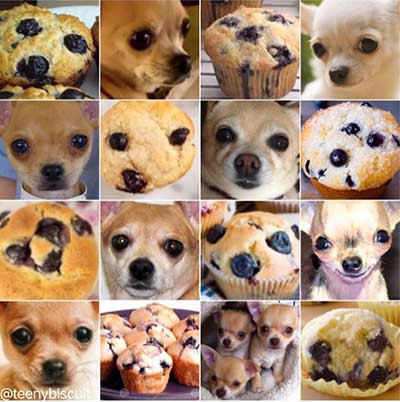

If you’ve spent any time on the internet, chances are you’ve seen the Chihuahua or Muffin AI meme. It’s exactly what it sounds like: side-by-side images of blueberry muffins and tiny dogs, daring you to tell them apart.

At first glance, it’s just a bit of fun. But it also accidentally nails one of the most important challenges in machine learning: how models can reach completely wrong conclusions and still look like they’re doing a great job.

This matters more than you might think, especially when AI starts getting used in places like healthcare, finance, or the legal system. Getting a chihuahua wrong might make you laugh. Getting a customer’s vulnerability assessment wrong? Not so funny.

Let’s dig into why this happens, and why it’s not just a weird meme problem.

What the Chihuahua or Muffin AI Meme Reveals About Model Failure

Here’s the thing about machine learning: it’s really good at pattern recognition. But it doesn’t actually understand what it’s looking at. It just learns to associate certain features in data with specific outcomes. If you give it enough examples, it’ll start spotting patterns, whether or not those patterns make any sense.

So, take the Chihuahua or Muffin AI example. If you train a computer vision model on enough pictures, it’ll start learning that muffins are often golden brown with dark spots, and that chihuahuas… also sometimes look like that, especially if they’re small and staring blankly into the void.

If your training data isn’t carefully selected and tested, your model might end up making decisions based on completely irrelevant details. It’s not seeing the difference between a baked good and a living creature. It’s just doing pixel maths.

And this isn’t just a hypothetical problem. It’s happened in real life, in far more serious settings.

The case of the wolves, the huskies, and the snow

One of the most famous examples comes from a 2016 paper by researchers at the University of Washington. They built a model to tell the difference between wolves and huskies. Simple enough.

The model did quite well at first: high accuracy, confident predictions. But when they looked a little closer (using interpretability tools to see what the model was actually paying attention to), they found something odd.

It wasn’t focusing on the animals’ fur, snouts, or ears. It was focusing on the background of the images.

The wolf photos in the training data had snow in the background. The husky photos didn’t. So the model learned that “snow = wolf”, and made its predictions accordingly. If a husky was standing in snow, the model often called it a wolf. It wasn’t looking at the animal at all. It had just picked up on a lazy shortcut in the data.

That’s what’s known as a spurious correlation – when a model latches onto a pattern that happens to show up in the training set, but doesn’t actually relate to the thing you’re trying to predict.
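To make that concrete, here is a minimal sketch on synthetic data. It is not the setup from the paper. It assumes two made-up features: `animal`, a noisy but genuine signal, and `snow`, a background cue that agrees with the label 95% of the time in training. The helper names (`make_data`, `train_logistic`, `accuracy`) and every number in it are illustrative assumptions.

```python
import math
import random


def make_data(n, snow_agreement, rng):
    """Build n synthetic examples as (animal, snow, label) tuples.

    animal: weak but genuine signal, centred at +0.5 or -0.5 by class.
    snow:   +1/-1 background cue that matches the label with
            probability snow_agreement (an assumed, illustrative value).
    """
    rows = []
    for _ in range(n):
        label = rng.randint(0, 1)
        sign = 1.0 if label == 1 else -1.0
        animal = 0.5 * sign + rng.gauss(0.0, 1.0)
        snow = sign if rng.random() < snow_agreement else -sign
        rows.append((animal, snow, label))
    return rows


def predict_proba(weights, row):
    """Logistic model: probability that the row belongs to class 1."""
    w_animal, w_snow, bias = weights
    z = w_animal * row[0] + w_snow * row[1] + bias
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(rows, steps=500, lr=0.5):
    """Plain full-batch gradient descent on the logistic loss."""
    weights = [0.0, 0.0, 0.0]
    n = len(rows)
    for _ in range(steps):
        grad = [0.0, 0.0, 0.0]
        for row in rows:
            error = predict_proba(weights, row) - row[2]
            grad[0] += error * row[0]
            grad[1] += error * row[1]
            grad[2] += error
        weights = [w - lr * g / n for w, g in zip(weights, grad)]
    return weights


def accuracy(weights, rows):
    hits = sum((predict_proba(weights, r) >= 0.5) == (r[2] == 1) for r in rows)
    return hits / len(rows)


rng = random.Random(0)
train = make_data(400, snow_agreement=0.95, rng=rng)    # cue tracks the label
shifted = make_data(400, snow_agreement=0.05, rng=rng)  # cue points the other way

weights = train_logistic(train)
train_acc = accuracy(weights, train)
shifted_acc = accuracy(weights, shifted)
print(f"weights (animal, snow, bias): {[round(w, 2) for w in weights]}")
print(f"accuracy on training-like data: {train_acc:.2f}")
print(f"accuracy once the cue flips:    {shifted_acc:.2f}")
```

In this toy setup the model should lean much harder on `snow` than on `animal`, because the background cue is the easier pattern to learn. That is why it can look excellent on data that resembles the training set and then fall apart as soon as the background stops cooperating.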

Why this matters (and not just to dogs and muffins)

It’s easy to laugh at mislabelled muffins or snowy wolves. But the same kind of lazy shortcuts can creep into far more important systems.

Let’s say you’re using machine learning to flag customers showing signs of financial vulnerability, or to monitor compliance in regulated conversations. If your model starts associating vulnerability with, say, speaking slowly or mentioning “bills”, you’ve got a problem.

Maybe it’s not picking up on actual distress. Maybe it’s picking up on common phrases or even regional accents that happened to appear more often in your training data. That’s not just a bad guess; it’s a recipe for discrimination, bias, and regulatory risk.

Worse still, these models can be very confident while being completely wrong. And without the right tools in place, you’d never know.
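One simple way to spot this is to compare how confident a model says it is with how often it is actually right. The sketch below assumes you already have predicted probabilities and true labels for a held-out set. The function name `confidence_report` and the sample numbers are hypothetical, invented purely to show the calculation.

```python
def confidence_report(probs, labels):
    """Compare average stated confidence with actual accuracy.

    probs:  predicted probability of the positive class, one per example.
    labels: true labels (0 or 1), in the same order as probs.
    """
    if len(probs) != len(labels) or not probs:
        raise ValueError("probs and labels must be non-empty and equal length")
    # Confidence in whichever class the model actually predicted.
    confidences = [max(p, 1.0 - p) for p in probs]
    correct = [(p >= 0.5) == (y == 1) for p, y in zip(probs, labels)]
    mean_confidence = sum(confidences) / len(confidences)
    accuracy = sum(correct) / len(correct)
    return {
        "mean_confidence": mean_confidence,
        "accuracy": accuracy,
        "overconfidence": mean_confidence - accuracy,
    }


# Invented example: a model that is very sure of itself and usually wrong.
probs = [0.97, 0.94, 0.91, 0.08, 0.95, 0.03]
labels = [0, 0, 1, 1, 0, 1]
report = confidence_report(probs, labels)
print(report)
```

A large positive `overconfidence` value means the model's certainty is running well ahead of its track record, which is exactly the situation you want flagged before anyone acts on its output.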

What can we do about it?

At this point you might be thinking: “Is AI just smoke and mirrors then?” Not quite. But it does mean we need to take a more thoughtful approach.

Here’s what actually helps:

- Explainability: We need to be able to understand why a model made a decision, not just whether it was “right”.

- Stress testing: Trying models out on edge cases, unfamiliar data, and deliberately tricky examples to see how they behave.

- Human oversight: No matter how clever the tech, there needs to be a person in the loop, especially when outcomes affect real people.

- Good data hygiene: Clean, diverse, well-labelled training data goes a long way. Garbage in, garbage out, as ever.
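As a rough illustration of the explainability point, one model-agnostic check is permutation importance: shuffle a single input across examples and see how much accuracy drops. A big drop on a feature that ought to be irrelevant is a warning sign. Everything in the sketch below is an assumption made for the example: `shortcut_model`, the feature names, and the data are made up, and a real project would more likely reach for an established library than this hand-rolled version.

```python
import random


def shortcut_model(row):
    """Hypothetical classifier that secretly keys on the background."""
    return 1 if row["snow"] else 0


def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, feature, repeats=20, seed=0):
    """Average accuracy drop when `feature` is shuffled across rows."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(repeats):
        values = [r[feature] for r in rows]
        rng.shuffle(values)
        # Copy each row with only the chosen feature replaced.
        shuffled = [dict(r, **{feature: v}) for r, v in zip(rows, values)]
        drops.append(baseline - accuracy(model, shuffled, labels))
    return sum(drops) / repeats


# Made-up evaluation set where the background happens to match the label.
rows = [{"ear_shape": i % 3, "snow": i % 2 == 0} for i in range(60)]
labels = [1 if r["snow"] else 0 for r in rows]

importances = {
    name: permutation_importance(shortcut_model, rows, labels, name)
    for name in ("ear_shape", "snow")
}
print(importances)
```

Here shuffling `ear_shape` changes nothing, while shuffling `snow` knocks accuracy down sharply. That tells you the model is leaning on the background and ignoring the animal, even though its headline accuracy looks perfect.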

At Aveni, a lot of what we do involves figuring out how models make decisions, not just what they predict. Whether we’re building models in-house or working with LLMs from elsewhere, we always want to understand the logic behind the outputs. If we can’t explain it, we don’t trust it. And neither should you.

Final thought

The Chihuahua or Muffin AI meme is funny because it reveals something unsettling: sometimes, it’s genuinely hard to tell what’s what. And if you, a human with a lifetime of visual context and common sense, can be fooled, what chance does a model have without proper guardrails?

So next time someone tells you their AI model is 95% accurate, ask them how it came to those answers. Ask what it’s really looking at. Ask if it could tell a muffin from a mutt in the snow.

If they can’t explain it… well, maybe it’s time to take a closer look at the ingredients.